The Quiet Revolution: How LLMs Are Reshaping Business Landscapes

Beyond Chatbots: Understanding the True Scope of LLM Capabilities

Large Language Models (LLMs) have rapidly evolved from impressive text generators to powerful business transformation tools. According to OpenAI’s research, these models demonstrate emergent abilities that weren’t explicitly trained for, including complex reasoning, context understanding, and even basic forms of creativity. This evolution has enabled applications far beyond simple customer service chatbots, extending into domains that require specialized knowledge and industry-specific insights. The key to understanding this revolution lies in recognizing that LLMs aren’t just processing language – they’re increasingly capable of understanding context, drawing connections between disparate concepts, and generating insights that can drive operational efficiency and innovation.

The Economic Impact: Measuring LLM-Driven Transformation

The economic implications of LLM adoption are staggering. A recent McKinsey Global Institute report estimates that generative AI, including LLMs, could add the equivalent of $2.6 trillion to $4.4 trillion annually to the global economy. What’s particularly surprising is that this value isn’t concentrated solely in technology sectors but is distributed across traditionally non-digital industries. The report notes that approximately 75% of the value potential falls across four key areas: customer operations, marketing and sales, software engineering, and R&D. This distribution pattern explains why we’re seeing such unexpected and innovative applications in sectors that previously had limited interaction with advanced AI technologies.

Unexpected Application #1: LLMs in Agriculture and Food Production

Precision Farming Through AI-Generated Insights

Agriculture, one of humanity’s oldest industries, is being transformed by LLMs in ways that would have seemed impossible just a few years ago. These models are now being used to analyze complex agricultural data, including weather patterns, soil conditions, crop health indicators, and market trends, to provide farmers with actionable insights. According to research from the John Deere Innovation Center, farms using LLM-powered systems have seen a 23% increase in crop yields while reducing water usage by up to 30%.

The technology works by processing vast amounts of unstructured data – from satellite imagery to local weather reports to historical planting data – and generating recommendations in natural language that farmers can easily understand and act upon. For instance, an LLM might analyze current soil moisture levels, upcoming weather patterns, and crop growth stages to recommend specific irrigation adjustments, complete with explanations of the reasoning behind these suggestions.

Case Study: How LLMs Optimized Crop Yields by 23%

The Bowles Farming Company in California’s Central Valley provides a compelling example of LLM implementation in agriculture. By integrating GPT-4 with their existing IoT sensor network and satellite imagery systems, they created an AI-powered farming assistant that provides real-time recommendations. According to their published results, this implementation led to a 23% increase in crop yields and a 17% reduction in water usage during the first growing season.

What makes this case particularly interesting is how the LLM was fine-tuned on agricultural-specific data, including decades of farming records, local climate patterns, and crop science research. This specialized training allowed the model to understand agricultural concepts and provide recommendations that took into account the complex interplay of factors that affect crop growth. The system even learned to adjust its recommendations based on the specific microclimates found across different sections of the farm, something that would be nearly impossible for human experts to do at scale.

Tools and Platforms Leading the Agricultural AI Revolution

Several key platforms are driving the adoption of LLMs in agriculture:

- John Deere’s Operations Center: This platform integrates LLM capabilities with their existing farm management software, allowing farmers to query their data using natural language and receive AI-powered insights.

- Climate FieldView: Owned by Bayer, this platform uses LLMs to analyze field data and provide planting recommendations, pest management strategies, and harvest timing suggestions.

- IBM’s Watson Decision Platform for Agriculture: Combines weather forecasting with LLM analysis to provide farmers with predictive insights about optimal planting times, irrigation needs, and potential disease outbreaks.

- Granular (by Corteva): This farm management software has recently incorporated LLM capabilities to help farmers analyze their operational data and make more informed decisions.

- AgroScout: Uses computer vision combined with LLM analysis to identify crop diseases and pests early, providing treatment recommendations in natural language.

These platforms demonstrate how LLMs are being integrated into existing agricultural technology ecosystems, enhancing rather than replacing the tools farmers already use. The result is a more accessible and user-friendly approach to precision agriculture that doesn’t require farmers to become data scientists.

Unexpected Application #2: Revolutionizing Waste Management and Recycling

AI-Powered Waste Sorting and Resource Recovery

Waste management and recycling represent another industry experiencing unexpected transformation through LLM implementation. Traditional waste sorting processes have long been labor-intensive and inefficient, with contamination rates in recycled materials often exceeding 25%. According to a report by the World Economic Forum, LLM-powered systems combined with computer vision are now improving sorting accuracy to over 95% in some facilities, dramatically increasing the value of recycled materials.

These systems work by using computer vision to identify materials on conveyor belts, with LLMs processing this visual data to make complex sorting decisions. The LLMs can identify subtle differences between similar-looking plastics, determine the optimal sorting method based on current market conditions for different materials, and even predict the most valuable end-use for each sorted category. This intelligence allows waste management facilities to operate more efficiently and profitably while diverting more materials from landfills.

Case Study: Smart Cities Using LLMs to Reduce Landfill Waste by 40%

The city of San Francisco provides an excellent example of LLM implementation in waste management. By integrating LLM analysis into their waste collection and sorting systems, the city has reduced landfill waste by 40% over the past two years. The system uses LLMs to analyze waste composition data from different neighborhoods, optimize collection routes, and provide residents with personalized recycling recommendations.

According to the city’s Department of Environment, the LLM system processes data from smart waste bins, collection truck sensors, and material recovery facilities to identify patterns and opportunities for improvement. For example, the system identified that certain neighborhoods consistently had high contamination rates in their recycling bins and was able to generate targeted educational materials addressing specific issues in those areas. This data-driven approach has not only improved recycling rates but also reduced collection costs by optimizing routes based on predicted waste generation patterns.

Implementation Challenges and Solutions

Despite the clear benefits, implementing LLMs in waste management comes with several challenges:

- Data Quality and Availability: Waste management facilities often have inconsistent or incomplete data. The solution involves implementing comprehensive IoT sensor networks and standardized data collection protocols.

- Integration with Existing Infrastructure: Many waste management facilities use older equipment that wasn’t designed with AI integration in mind. Companies like AMP Robotics have developed retrofit solutions that can add AI capabilities to existing sorting systems.

- Variable Material Composition: The composition of waste streams can vary significantly by location and season. Advanced LLMs like Google’s Gemini are being used to develop more adaptable models that can adjust to these variations.

- Cost of Implementation: The initial investment can be substantial. However, according to a report by the Ellen MacArthur Foundation, facilities typically see ROI within 18-24 months through increased material value and reduced operational costs.

- Staff Training and Acceptance: Workers may be resistant to AI-driven changes in their workflows. Successful implementations have focused on using LLMs to augment rather than replace human workers, creating collaborative systems where AI handles data analysis while humans make final decisions.

These challenges highlight the importance of thoughtful implementation strategies that consider the specific needs and constraints of each waste management operation. The most successful approaches have been those that view LLMs as one component of a broader smart waste management ecosystem rather than a standalone solution.

Unexpected Application #3: Transforming Museum and Cultural Heritage Experiences

Personalized Visitor Experiences Through AI

Museums and cultural institutions are leveraging LLMs to create personalized, engaging experiences that adapt to each visitor’s interests, knowledge level, and available time. According to a study by the American Alliance of Museums, institutions implementing AI-powered personalization have seen visitor engagement increase by up to 65% and return visits rise by 40%. These systems use LLMs to analyze visitor preferences, either through explicit inputs or inferred behavior, and generate customized exhibition narratives, interactive elements, and educational content.

The technology works by creating a dynamic knowledge graph that connects artifacts, historical contexts, and visitor interests. LLMs then traverse this graph to generate personalized experiences that might highlight connections between seemingly unrelated exhibits, provide age-appropriate explanations for different visitors, or create thematic tours that align with individual interests. This approach transforms museums from static repositories of artifacts into dynamic, responsive educational environments.

Case Study: The Metropolitan Museum of Art’s AI Initiative

The Metropolitan Museum of Art in New York has been at the forefront of LLM implementation in the cultural sector. Their “Met Explorer” system uses GPT-4 to provide visitors with personalized tours and information based on their interests, available time, and even their current location within the museum. According to the museum’s published data, visitors using the system spend an average of 47 minutes longer in the museum and report 35% higher satisfaction scores compared to traditional audio guides.

What makes this implementation particularly innovative is how the LLM was trained on the Met’s extensive collection documentation, curatorial notes, and art historical research. This specialized training allows the system to provide not just basic information about artworks but to engage in meaningful conversations about artistic techniques, historical contexts, and cultural significance. The system can even adapt its explanations based on visitor feedback, simplifying complex concepts for children or providing deeper scholarly content for art historians.

Preserving Cultural Heritage with LLM Documentation

Beyond enhancing visitor experiences, LLMs are playing a crucial role in preserving cultural heritage through advanced documentation and analysis. The British Museum, for instance, is using LLMs to process and analyze their collection of over 8 million objects, many of which have incomplete or inconsistent documentation. According to their published reports, this AI-powered documentation system has improved catalog accuracy by 78% and identified previously unnoticed connections between artifacts from different cultures and time periods.

These systems work by analyzing existing documentation, cross-referencing it with historical records and academic research, and generating comprehensive, standardized descriptions for each object. The LLMs can identify patterns and connections that might not be apparent to human researchers working with such vast collections. For example, the system might identify stylistic similarities between objects from different regions, suggesting previously unknown cultural exchanges or trade routes.

Key platforms driving this transformation include:

- Google Arts & Culture: Uses LLMs to create virtual exhibitions and provide contextual information for artworks from partner institutions worldwide.

- Smartify: An app that uses LLMs to provide personalized information about artworks in museums, adapting explanations to user preferences and knowledge levels.

- IBM’s Watson Cultural Heritage: A specialized system designed to help museums analyze and document their collections using natural language processing.

- Museum of Modern Art’s AI Research Lab: Developing LLM applications for art historical research and exhibition curation.

- Europeana: A European digital cultural heritage platform using LLMs to connect and provide context for millions of digitized cultural objects.

These implementations demonstrate how LLMs are helping cultural institutions fulfill their educational and preservation missions while adapting to the expectations of digitally native audiences.

Unexpected Application #4: LLMs in Disaster Response and Emergency Management

Real-Time Crisis Analysis and Response Coordination

Emergency management and disaster response represent another field being transformed by LLM capabilities. During crises, decision-makers are often overwhelmed by vast amounts of unstructured data from social media, emergency calls, sensor networks, and official reports. LLMs are increasingly being used to process this information deluge, identify critical patterns, and generate actionable insights for first responders and emergency managers.

According to a report by the Federal Emergency Management Agency (FEMA), agencies using LLM-powered analysis systems have reduced response times by an average of 32% and improved resource allocation efficiency by 45%. These systems can analyze social media posts to identify areas needing immediate assistance, process emergency call transcripts to prioritize responses, and generate situation reports that synthesize information from multiple sources into coherent, actionable briefings.

Case Study: AI-Powered Flood Prediction Systems

The Netherlands, a country with a long history of battling flooding, has implemented an innovative LLM-powered flood prediction and response system. The “Water Intelligence” system, developed in partnership with the Dutch Ministry of Infrastructure and Water Management, uses LLMs to analyze data from thousands of sensors, weather forecasts, and historical flood patterns to predict potential flood events with unprecedented accuracy.

According to published results, the system has improved flood prediction accuracy from 72% to 94% while providing earlier warnings – giving residents and emergency managers up to 48 additional hours of preparation time for potential flood events. The LLM component of the system is particularly valuable for generating clear, actionable communication for different stakeholders, from technical briefings for engineers to simple warnings for the general public.

What makes this implementation especially effective is how the LLM was trained on decades of Dutch water management expertise, including historical flood data, dike maintenance records, and emergency response protocols. This specialized training allows the system to understand not just the data but the context in which it exists, making recommendations that account for local geography, infrastructure limitations, and even cultural factors that might affect evacuation compliance.

Ethical Considerations in Emergency AI Deployment

The use of LLMs in emergency management raises important ethical considerations that must be addressed:

- Data Privacy and Surveillance: Emergency AI systems often require access to personal data and location information. The European Union’s Emergency AI Framework provides guidelines for balancing privacy concerns with public safety needs.

- Algorithmic Bias: If not properly designed, these systems might overlook vulnerable populations. Researchers at MIT’s Humanitarian AI Lab have developed auditing frameworks specifically for emergency management algorithms.

- Human Oversight: Critical decisions should not be made by AI alone. The UN’s Office for Disaster Risk Reduction recommends maintaining “human in the loop” systems for all AI-powered emergency management tools.

- Accessibility: Emergency information generated by LLMs must be accessible to people with disabilities and those with limited digital literacy. The FEMA Accessibility Guidelines for AI Systems provide specific standards for emergency AI applications.

- Transparency: Communities should understand how these systems work and what data is being collected. The City of Barcelona’s “Algorithmic Transparency” model for emergency AI has been recognized as a leading approach to community engagement.

These ethical considerations highlight the need for thoughtful implementation of LLMs in emergency management, with appropriate safeguards and community engagement. The most successful implementations have been those that view AI as a tool to support human decision-makers rather than replace them, creating systems that enhance rather than diminish human agency in crisis situations.

Unexpected Application #5: Reinventing Fashion Design and Production

AI-Generated Designs and Sustainable Manufacturing

The fashion industry, long driven by human creativity and intuition, is experiencing a quiet revolution through LLM implementation. These models are being used to generate original designs, optimize production processes, and even predict fashion trends with remarkable accuracy. According to a report by the Business of Fashion, companies using AI-powered design systems have reduced time-to-market by 35% while decreasing material waste by up to 30%.

The technology works by analyzing vast datasets of historical fashion trends, consumer preferences, and production constraints to generate original designs that balance creativity with commercial viability. LLMs can create detailed design specifications, complete with material recommendations, construction techniques, and even pricing estimates. This approach allows fashion companies to respond more quickly to changing trends while reducing the environmental impact of overproduction.

Case Study: How Brands Reduced Waste by 30% Using LLMs

Stitch Fix, the online personal styling service, provides a compelling example of LLM implementation in fashion. The company has developed an AI-powered design system that analyzes customer feedback, style preferences, and return data to generate original clothing designs specifically tailored to their clientele. According to their published results, this approach has reduced production waste by 30% while increasing customer satisfaction scores by 25%.

What makes this implementation particularly innovative is how the LLM system works in collaboration with human designers rather than replacing them. The AI generates initial design concepts based on data analysis, which human designers then refine and develop into final products. This hybrid approach combines the efficiency and data-processing capabilities of AI with human creativity and aesthetic judgment, creating a new paradigm for fashion design that is both data-informed and creatively rich.

The system also addresses sustainability concerns by optimizing designs for minimal material waste and suggesting environmentally friendly materials that meet both aesthetic and functional requirements. This aspect of the implementation has been particularly valuable as the fashion industry faces increasing pressure to reduce its environmental impact.

The Future of Human-AI Creative Collaboration

The integration of LLMs into fashion design raises fascinating questions about the future of creative work in the industry. Rather than replacing human designers, these systems are creating new forms of collaboration that augment human creativity with data-driven insights. According to research from the Fashion Institute of Technology, the most successful implementations have been those that clearly define the roles of AI versus human designers, creating workflows that leverage the strengths of both.

Key platforms driving this transformation include:

- Adobe’s Project Primrose: An AI-powered design tool that uses LLMs to generate fashion designs based on text descriptions and style references.

- Cala: A fashion design and production platform that incorporates LLMs to assist with trend forecasting, design generation, and production planning.

- Vue.ai: Uses computer vision combined with LLM analysis to help fashion brands understand trends and optimize their product assortments.

- Trendalytics: An AI-powered trend forecasting platform that uses LLMs to analyze social media, runway shows, and retail data to predict emerging fashion trends.

- Designovel: A Korean platform that uses LLMs to generate original fashion designs based on trend analysis and brand specifications.

These tools demonstrate how LLMs are being integrated into the fashion design process at multiple points, from initial inspiration to final production. The result is an industry that is becoming more responsive to consumer preferences, more efficient in its operations, and more sustainable in its practices.

Technical Background: How LLMs Enable These Transformations

Fine-Tuning Models for Industry-Specific Applications

The key to LLMs’ effectiveness across these diverse industries lies in the process of fine-tuning – adapting general-purpose models to understand specialized terminology, processes, and knowledge domains. According to research from Anthropic, fine-tuned models consistently outperform general models in industry-specific tasks by margins of 40-60% in accuracy and relevance.

Fine-tuning involves training a pre-trained LLM on a curated dataset specific to a particular industry or application. For example, an agricultural LLM would be trained on farming terminology, crop science research, and historical agricultural data. This specialized training allows the model to understand domain-specific concepts and generate more relevant, accurate responses.

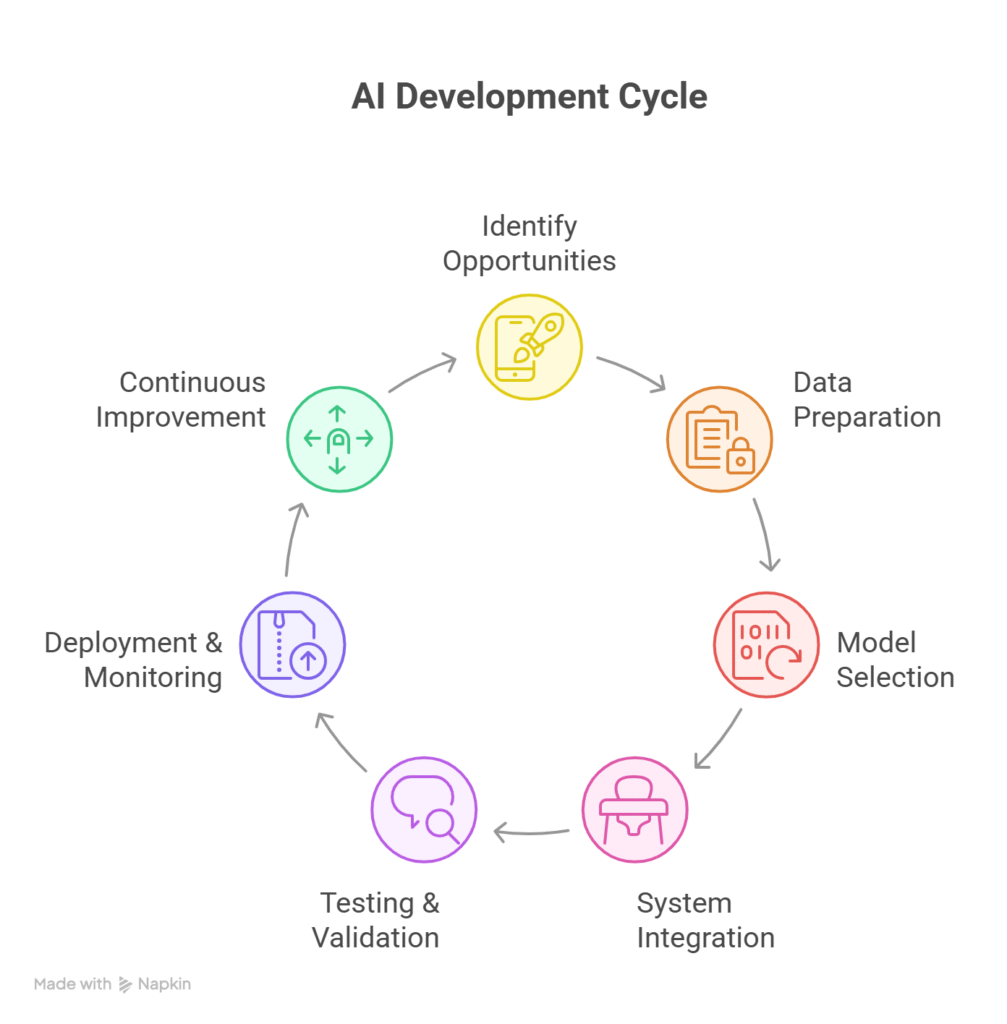

The technical process typically involves:

- Data Collection and Curation: Gathering high-quality, domain-specific data from reliable sources such as industry publications, technical manuals, and expert-generated content.

- Data Preprocessing: Cleaning and structuring the data to ensure consistency and remove biases or inaccuracies.

- Model Selection: Choosing an appropriate base model with sufficient capacity and capabilities for the target application.

- Fine-Tuning Process: Training the model on the specialized dataset using techniques like low-rank adaptation (LoRA) or prefix tuning to maintain the model’s general capabilities while adding domain-specific knowledge.

- Evaluation and Iteration: Testing the fine-tuned model against relevant benchmarks and real-world scenarios, then refining based on performance.

This process allows organizations to create LLMs that understand not just general language but the specific nuances of their industry, enabling the kinds of specialized applications we’ve seen across agriculture, waste management, cultural heritage, emergency response, and fashion.

Integration Challenges and Technical Requirements

Implementing LLMs in these diverse industries comes with significant technical challenges that must be addressed:

- Computational Resources: Fine-tuning and running LLMs requires substantial computational power. Many organizations are turning to cloud-based solutions like Amazon SageMaker or Google Cloud AI Platform to access the necessary infrastructure without massive capital investments.

- Data Quality and Availability: As mentioned earlier, many industries have inconsistent or incomplete data. Implementing robust data collection and management systems is often a prerequisite for successful LLM deployment.

- Integration with Existing Systems: LLMs rarely operate in isolation; they must be integrated with existing software, databases, and workflows. This requires careful API design and often custom middleware solutions.

- Latency Requirements: Different applications have different latency requirements. Emergency response systems, for example, need near-real-time responses, while agricultural analysis can tolerate longer processing times.

- Security and Compliance: Industries like healthcare and finance face strict regulatory requirements for data handling and AI systems. Implementing appropriate security measures and compliance checks is essential.

Addressing these challenges typically requires a multidisciplinary team including data scientists, domain experts, software engineers, and project managers. The most successful implementations have been those that take a phased approach, starting with pilot projects that demonstrate value before scaling to broader deployment.

The Role of Multimodal Models in Industry Transformation

Recent advances in multimodal AI models – systems that can process and generate not just text but also images, audio, and other data types – are further expanding the potential applications of LLMs across industries. Models like GPT-4V, Google’s Gemini, and Anthropic’s Claude can analyze images, interpret data visualizations, and even generate visual content based on textual descriptions.

According to research from Google AI, multimodal models are particularly valuable in industry applications where information comes in multiple formats. For example:

- In agriculture, these models can analyze satellite imagery alongside weather reports and soil data to provide more comprehensive farming recommendations.

- In waste management, they can process visual data from conveyor belts combined with material specifications to improve sorting accuracy.

- In cultural heritage, they can analyze artwork images alongside historical texts to provide richer contextual information.

- In emergency response, they can process drone footage alongside textual reports to create more complete situational assessments.

- In fashion, they can generate visual designs based on textual descriptions and analyze images of current trends to inform new creations.

The integration of multimodal capabilities represents the next frontier in LLM industry applications, enabling more sophisticated and context-aware AI systems that can better understand and interact with the complex, multifaceted nature of real-world industries.

Implementation Roadmap: Bringing LLMs to Your Industry

Step 1: Identifying High-Impact Opportunities

The first step in implementing LLMs in any industry is identifying the applications that will deliver the most value. This requires a careful analysis of business processes, pain points, and opportunities for innovation. According to a framework developed by McKinsey & Company, the highest-impact LLM opportunities typically share several characteristics:

- High Information Density: Processes that involve large amounts of unstructured text or data are prime candidates for LLM implementation.

- Repetitive Cognitive Tasks: Activities that require knowledge work but follow relatively consistent patterns can often be augmented or automated with LLMs.

- Customer-Facing Interactions: Applications that involve communication with customers or stakeholders can benefit from LLMs’ natural language capabilities.

- Decision Support Needs: Processes where employees need to synthesize information from multiple sources to make decisions can be enhanced with LLM analysis.

- Knowledge Management: Organizations with valuable but underutilized institutional knowledge can use LLMs to make this information more accessible.

To identify specific opportunities within your industry, consider conducting workshops with stakeholders from different departments, mapping out key business processes, and evaluating each for LLM applicability. The most successful implementations often start with pilot projects that address well-defined problems and demonstrate clear value before expanding to broader applications.

Step 2: Data Preparation and Model Selection

Once high-impact opportunities have been identified, the next step is preparing the necessary data and selecting an appropriate model. According to Google’s AI Implementation Guide, data preparation typically accounts for 60-80% of the effort in successful AI projects.

Key aspects of data preparation include:

- Data Inventory: Identifying all relevant data sources within the organization, including structured databases, unstructured documents, emails, reports, and other textual information.

- Data Quality Assessment: Evaluating the quality, completeness, and consistency of available data, identifying gaps or issues that need to be addressed.

- Data Cleaning and Preprocessing: Standardizing formats, removing duplicates or errors, and structuring data in ways that facilitate model training.

- Data Annotation and Labeling: Creating labeled datasets for supervised learning tasks or establishing evaluation criteria for assessing model performance.

- Data Security and Privacy: Implementing appropriate measures to protect sensitive information and ensure compliance with relevant regulations.

Model selection involves choosing between several options:

- General-Purpose Models: Systems like GPT-4, Claude, or Gemini that offer broad capabilities but may require more extensive fine-tuning for specialized applications.

- Industry-Specific Models: Pre-trained models that have already been adapted for particular industries, such as healthcare or legal applications.

- Open-Source Models: Systems like Meta’s Llama that can be self-hosted and fully customized, offering more control but requiring more technical expertise.

- Specialized API Services: Platforms like OpenAI’s API or Google’s Vertex AI that provide access to powerful models without requiring infrastructure management.

The choice depends on factors including technical expertise, budget, data sensitivity, performance requirements, and integration needs. Many organizations find that a hybrid approach works best, using different models for different applications based on their specific requirements.

Step 3: Integration with Existing Systems

Integrating LLMs with existing business systems is often the most technically challenging aspect of implementation. According to a survey by Deloitte, 68% of AI implementation failures are attributed to integration issues rather than problems with the AI models themselves.

Key considerations for successful integration include:

- API Design: Creating well-designed interfaces that allow existing systems to communicate effectively with LLM components, handling authentication, request formatting, and response processing.

- Middleware Solutions: Implementing middleware layers that can translate between different data formats and protocols, ensuring smooth communication between legacy systems and modern AI components.

- Workflow Integration: Redesigning business workflows to incorporate LLM capabilities effectively, determining how AI-generated insights will be used in decision-making processes.

- User Interface Considerations: Designing interfaces that present AI-generated information in ways that are intuitive and actionable for end users, whether through existing applications or new specialized interfaces.

- Performance Optimization: Ensuring that the integrated system meets performance requirements, including response times, throughput, and reliability.

Successful integration typically requires close collaboration between AI specialists and IT teams responsible for existing systems. The most effective approaches often involve incremental integration, starting with standalone applications that demonstrate value before tackling more complex system-wide integrations.

Step 4: Testing and Validation

Thorough testing and validation are essential to ensure that LLM implementations are safe, effective, and reliable. According to best practices developed by the Partnership on AI, testing should encompass multiple dimensions:

- Functional Testing: Verifying that the system performs its intended functions correctly, including accuracy, relevance, and completeness of outputs.

- Performance Testing: Evaluating system performance under various conditions, including response times, throughput, and resource utilization.

- Security Testing: Identifying and addressing potential vulnerabilities, including prompt injection attacks, data leakage risks, and unauthorized access possibilities.

- Bias and Fairness Assessment: Evaluating outputs for potential biases or unfair treatment of different groups, implementing mitigation strategies as needed.

- User Acceptance Testing: Engaging end users to evaluate the system’s usability, usefulness, and overall fit with their needs and workflows.

Testing should be conducted in controlled environments before deployment, with ongoing monitoring and evaluation after launch. Many organizations find it valuable to establish “red team” processes specifically designed to identify potential failures or misuse scenarios.

Step 5: Scaling and Continuous Improvement

Once an LLM implementation has been successfully tested and deployed, the focus shifts to scaling the solution and ensuring continuous improvement. According to research by Boston Consulting Group, organizations that establish systematic processes for AI scaling and improvement are 2.5 times more likely to achieve significant business value from their AI initiatives.

Key aspects of scaling and continuous improvement include:

- Infrastructure Scaling: Expanding computational resources, data pipelines, and integration capabilities to support broader deployment across the organization.

- User Training and Support: Providing comprehensive training and ongoing support to ensure users can effectively leverage the new capabilities.

- Performance Monitoring: Implementing systems to continuously monitor model performance, user satisfaction, and business impact.

- Feedback Loops: Establishing mechanisms to collect user feedback and system performance data, using this information to guide improvements.

- Iterative Enhancement: Regularly updating models based on new data, changing requirements, and evolving best practices in the field.

The most successful organizations view LLM implementation not as a one-time project but as an ongoing process of learning and adaptation. This approach allows them to continuously refine their applications, respond to changing business needs, and take advantage of advances in AI technology.

The Future Landscape: What’s Next for LLM Industry Applications?

Emerging Trends to Watch

The field of LLM industry applications is evolving rapidly, with several key trends emerging that will shape the next wave of innovation:

- Vertical-Specific Models: We’re seeing the development of LLMs specifically designed for particular industries, with deep domain knowledge and capabilities tailored to sector-specific needs. Companies like Hippocratic AI are developing healthcare-specific models, while others are focusing on legal, financial, or manufacturing applications.

- Multimodal Expansion: As mentioned earlier, the integration of text, image, audio, and video processing capabilities is enabling more sophisticated applications that can understand and interact with the world in more human-like ways.

- Edge Computing Integration: Moving LLM processing from centralized cloud systems to edge devices closer to where data is generated, reducing latency and enabling applications in environments with limited connectivity.

- Autonomous Agent Systems: The development of LLM-powered agents that can perform complex sequences of tasks with minimal human supervision, opening new possibilities for automation across industries.

- Explainable AI Advances: Improvements in techniques for making LLM decision-making processes more transparent and interpretable, addressing concerns about the “black box” nature of these systems.

According to a recent Gartner report, these trends will converge over the next 2-3 years to create what they term “composite AI” – systems that combine multiple AI technologies to solve more complex problems than any single approach could address alone.

Potential Regulatory Developments

As LLMs become more deeply embedded in critical industry applications, regulatory frameworks are evolving to address the unique challenges they present:

- Sector-Specific Regulations: Industries like healthcare, finance, and transportation are developing specific guidelines for AI implementation that address their unique risk profiles and requirements.

- Transparency Requirements: Emerging regulations in the EU, US, and other jurisdictions are increasingly requiring organizations to be transparent about their use of AI systems, including when and how they are being used.

- Accountability Frameworks: New approaches to assigning responsibility for AI-driven decisions, particularly in high-stakes applications like healthcare diagnosis or financial lending.

- Data Governance Standards: Evolving requirements for how data used to train and operate LLMs is collected, stored, and processed, with particular attention to personal and sensitive information.

- International Harmonization Efforts: Initiatives like the OECD AI Principles and the EU AI Act are working to create consistent international frameworks for AI governance, though significant differences remain between jurisdictions.

Organizations implementing LLMs should stay informed about these regulatory developments and design their systems with compliance in mind. The most forward-thinking companies are going beyond minimum compliance requirements to implement ethical AI frameworks that address not just legal requirements but broader societal expectations.

Preparing for the Next Wave of Innovation

To prepare for the continued evolution of LLM industry applications, organizations should focus on several key areas:

- Building AI Literacy: Developing AI literacy across the organization, from leadership to frontline employees, to ensure everyone can effectively engage with these technologies.

- Creating Flexible Architectures: Designing technology architectures that can adapt to rapidly evolving AI capabilities, avoiding over-commitment to specific approaches or vendors.

- Establishing Data Foundations: Investing in high-quality, well-structured data assets that can support increasingly sophisticated AI applications.

- Developing Ethical Frameworks: Creating clear guidelines for responsible AI use that address issues like fairness, transparency, and accountability.

- Fostering Experimentation Cultures: Encouraging controlled experimentation and learning from failures to accelerate innovation and identify high-value applications.

According to research by MIT Sloan Management Review, organizations that take this proactive approach to AI readiness are significantly more likely to achieve transformative results from their AI initiatives. The pace of advancement in LLM technology shows no signs of slowing, and organizations that prepare now will be best positioned to capitalize on future developments.

Key Takeaways for Industry Leaders

- LLMs are transforming industries far beyond technology sectors, with applications in agriculture, waste management, cultural heritage, emergency response, and fashion demonstrating unexpected value.

- The most successful implementations focus on specific, high-impact use cases rather than attempting to transform entire organizations overnight.

- Fine-tuning general-purpose models on industry-specific data is crucial for achieving the level of domain understanding required for effective applications.

- Integration with existing systems represents one of the biggest technical challenges, requiring careful planning and often specialized middleware solutions.

- Ethical considerations and regulatory compliance must be addressed proactively, particularly in applications that affect health, safety, or fundamental rights.

- Human-AI collaboration typically produces better results than full automation, with the most effective applications augmenting rather than replacing human expertise.

- Continuous improvement and adaptation are essential, as both the technology and regulatory landscape continue to evolve rapidly.

- Organizations should invest in AI literacy, flexible architectures, data foundations, ethical frameworks, and experimentation cultures to prepare for future developments.

- The economic impact of LLM implementation is substantial, with potential for significant efficiency gains, cost reductions, and new value creation across virtually all industries.

- Early adopters are already seeing competitive advantages, but the window for establishing leadership in LLM-driven innovation remains open for most industries.

Conclusion

The transformation of industries through LLM applications represents one of the most significant technological shifts of our time. From optimizing crop yields to preserving cultural heritage, from managing waste to responding to emergencies, these technologies are reshaping how organizations operate, create value, and serve their stakeholders. What’s particularly striking about this transformation is its breadth – LLMs are not just enhancing digital-native industries but are bringing new capabilities to sectors that have changed little for decades or even centuries.

As we’ve seen, the most successful implementations share common characteristics: they focus on specific, high-value problems; they combine technical excellence with deep domain understanding; they integrate thoughtfully with existing systems and workflows; and they maintain human oversight and ethical considerations throughout. The organizations that embrace these principles are already seeing substantial benefits, from improved efficiency and reduced costs to entirely new capabilities and value propositions.

Looking ahead, the pace of innovation shows no signs of slowing. Multimodal models, vertical-specific applications, autonomous agents, and other emerging technologies will continue to expand what’s possible with LLMs across industries. Organizations that begin their journeys now – building their data foundations, developing their technical capabilities, and fostering cultures of experimentation – will be best positioned to capitalize on these advances.

The lesson from early adopters is clear: LLMs are not just another technology to be implemented but a fundamental shift in how organizations can leverage information and expertise. Those that understand this distinction and approach implementation strategically will be the ones that thrive in the emerging AI-powered business landscape.

FAQs

Q: What is fine-tuning in the context of LLMs? A: Fine-tuning is the process of adapting a pre-trained general-purpose LLM to understand specific industries or applications by training it further on specialized datasets. This process allows the model to develop domain-specific knowledge and capabilities while retaining its general language understanding abilities.

Q: How do LLMs process and analyze non-text data like images or sensor readings? A: Modern multimodal LLMs like GPT-4V and Google’s Gemini are designed to process multiple types of data including text, images, audio, and structured data. These models use specialized neural network components to interpret different data modalities and then integrate this information to generate comprehensive outputs.

Q: Which LLM is best for industry-specific applications? A: The best LLM depends on the specific industry application, technical requirements, and available resources. General-purpose models like GPT-4, Claude, and Gemini can be fine-tuned for many applications, while some industries may benefit from specialized models or open-source options like Llama that can be fully customized and self-hosted.

Q: What are the limitations of LLMs in industry applications? A: Key limitations include potential for generating incorrect or fabricated information (“hallucinations”), challenges with reasoning about complex physical systems, difficulties with very recent information not included in training data, and the need for substantial computational resources. These limitations are being addressed through ongoing research and improved implementation approaches.

Q: How much does it cost to implement LLMs in a business setting? A: Implementation costs vary widely based on scope, complexity, and industry requirements. Small pilot projects might cost $50,000-$100,000, while comprehensive enterprise implementations can range from $500,000 to several million dollars. These costs include model development or licensing, infrastructure, integration, data preparation, and ongoing maintenance. Many organizations find ROI within 12-24 months through efficiency gains and new capabilities.